Social media has become a key tool for spreading political influence, and Russian bots play a significant role in it. Increasingly aided by artificial intelligence (AI), these bots have disrupted democratic systems, deepened divisions, and manipulated public opinion worldwide. From the 2016 U.S. presidential election to the ongoing war in Ukraine, they have been used to spread disinformation.

A study by Rebecca Marigliano, Lynnette Hui Xian Ng, and Kathleen Carley shows how Russian bots manipulate narratives to fuel polarisation and undermine democratic values. The research finds that bots frame actions such as Russia’s invasion of Ukraine as justified while discrediting Ukraine, distorting public perception and weakening institutional trust. The bots also reinforce one-sided views, suppress opposing perspectives, and deepen divisions within online communities. Their activity spikes around key moments in the Ukraine conflict, timed to major events and exploiting platform algorithms to dominate social media trends. A similar pattern appeared during the 2016 U.S. presidential election.

Interference with the 2016 U.S. Election

In 2018, Twitter (now X) removed 200,000 tweets linked to Russian bots operated by the Internet Research Agency (IRA). These bots spread fake stories, including attacks on Democrats and impersonations of Black Lives Matter activists, as part of a campaign to influence the 2016 U.S. election.

Russian campaigns used current events such as terrorist attacks and political debates to push propaganda. Fake accounts interacted with U.S. politicians, media figures, and celebrities, deepening political divisions. Twitter suspended 3,814 accounts linked to the IRA and deleted their tweets, a move some critics saw as "erasing history."

NBC News analysed the tweets and found Russian accounts impersonating Americans and spreading racist and conspiracy-driven content. The data showed ongoing Russian interference, even during the U.S. midterms. NBC News made the dataset available for public and research use.

Visual Representation

Figure 1: Percentage of Russian Bot Tweets Containing Political Keywords ('Trump' or 'Hillary') by Each User

Of the 11,455 tweets from the ten users analysed here, drawn from the dataset recovered by NBC, 3,784 (about 33%) mentioned the keywords ‘Trump’ or ‘Hillary’. Every user tweeted about the election, and users 5, 6, 7, and 10 mentioned the candidates in more than 50% of their tweets. Users 1, 3, 4, and 9 show moderate political activity, mixing in non-political content, possibly to appear more authentic while still promoting political messages. Users 2 and 8 have the lowest percentages and focus mostly on non-political content, possibly as filler or to avoid detection as bots.
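The per-user percentages in Figure 1 come down to a simple count: for each account, the share of its tweets that mention either candidate. The Python sketch below shows one way to compute them. It is illustrative only: the source does not describe its exact method, the `(user, text)` input layout is a hypothetical stand-in for the dataset's columns, and the case-insensitive substring test is an assumption (it catches hashtags such as #Trump2016 but would also count a word like "trumpet").

```python
from collections import Counter

# Assumption: a tweet is "political" if either keyword appears anywhere in it,
# case-insensitively. The original analysis may have used a different rule.
KEYWORDS = ("trump", "hillary")


def mentions_candidate(text):
    """Return True if the tweet text contains either keyword."""
    lowered = (text or "").lower()
    return any(keyword in lowered for keyword in KEYWORDS)


def political_share_by_user(tweets):
    """Map each user to the percentage of their tweets mentioning a keyword.

    `tweets` is an iterable of (user, text) pairs, a hypothetical layout
    standing in for the dataset's user and tweet-text columns.
    """
    totals = Counter()  # tweets per user
    hits = Counter()    # keyword-mentioning tweets per user
    for user, text in tweets:
        totals[user] += 1
        if mentions_candidate(text):
            hits[user] += 1
    return {user: 100.0 * hits[user] / totals[user] for user in totals}


# Illustrative input only, not drawn from the NBC dataset.
sample = [
    ("user_a", "Crooked Hillary strikes again"),
    ("user_a", "Great weather today"),
    ("user_b", "Trump rally tonight!"),
]
print(political_share_by_user(sample))  # {'user_a': 50.0, 'user_b': 100.0}
```

The overall share is the sum of hits divided by the sum of totals; for the figures reported above, 3,784 / 11,455 is roughly 33%.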

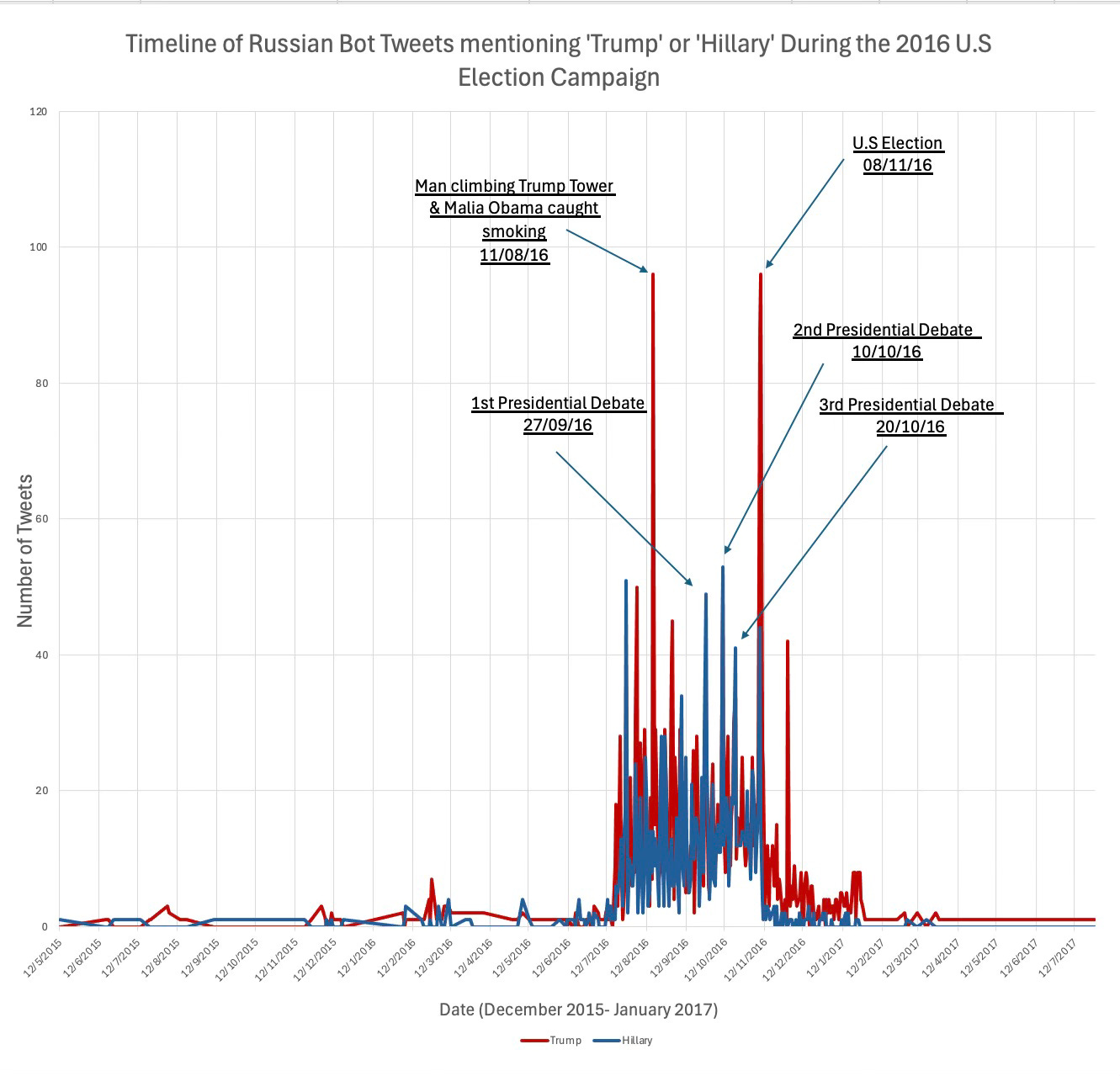

Figure 2: Timeline of Russian Bot Tweets Mentioning 'Trump' or 'Hillary' During the 2016 U.S. Election Campaign

The graph shows Russian bot activity during the 2016 U.S. Presidential Election, with tweet spikes around significant events. Activity remained low until mid-2016, then sharply increased in July/August 2016 as the election approached.

Large spikes occurred around the first, second, and third presidential debates (Sept-Oct 2016), when tweets about Hillary outnumbered those about Trump. The highest peak came on Election Day (Nov 8, 2016), with a surge in mentions of Trump, suggesting a targeted effort to boost his visibility. Other events, such as the man climbing Trump Tower (Aug 11, 2016) and the Malia Obama controversy, also triggered spikes in activity. After the election, activity dropped, suggesting the bots were focused on influencing its outcome.
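A timeline like Figure 2 can be built by bucketing keyword mentions by calendar day and then ranking the days by volume. The Python sketch below is a minimal illustration under stated assumptions, not the method used to produce the figure: timestamps are assumed to be ISO-style strings such as "2016-11-08 14:05:00", the `(timestamp, text)` layout is hypothetical, and a tweet naming both candidates is counted once under each keyword.

```python
from collections import defaultdict
from datetime import datetime

KEYWORDS = ("trump", "hillary")


def daily_mentions(tweets):
    """Count, per calendar day, the tweets mentioning each keyword.

    `tweets` is an iterable of (timestamp, text) pairs (hypothetical layout).
    Days with no keyword mentions are omitted. Returns a dict ordered by date.
    """
    counts = defaultdict(lambda: {keyword: 0 for keyword in KEYWORDS})
    for timestamp, text in tweets:
        day = datetime.fromisoformat(timestamp).date()
        lowered = (text or "").lower()
        for keyword in KEYWORDS:
            if keyword in lowered:
                counts[day][keyword] += 1
    return dict(sorted(counts.items()))


def top_spike_days(counts, n=3):
    """Return the n days with the most mentions of both keywords combined."""
    return sorted(counts, key=lambda day: sum(counts[day].values()), reverse=True)[:n]


# Illustrative input only, not drawn from the NBC dataset.
sample = [
    ("2016-10-09 21:00:00", "Hillary lied again at the debate"),
    ("2016-10-09 21:05:00", "Trump won the debate"),
    ("2016-11-08 09:00:00", "Vote Trump today"),
    ("2016-07-01 12:00:00", "Nice sunset"),
]
counts = daily_mentions(sample)
print(top_spike_days(counts, n=1))  # [datetime.date(2016, 10, 9)]
```

Day boundaries depend on the time zone the timestamps are stored in, so a late-evening spike in U.S. time, such as a debate night, may land on the following calendar day in UTC-based data.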

Ukraine War

Russia's use of bots expanded during the war in Ukraine. On July 9, 2024, the U.S. Justice Department revealed that it had identified 968 accounts linked to Russian bot farms. These bots promoted divisive narratives, including justifying Russia's invasion of Ukraine and distorting Eastern European history while framing the conflict as part of a "New World Order."

The report notes that AI tools such as the program Meliorator helped create fake personas that seemed authentic, making the bots more convincing. The operation, which the Justice Department says was organised by RT News and funded by the Kremlin through the Federal Security Service (FSB), allegedly violated U.S. laws, including sanctions and money laundering regulations.

The bots impersonated U.S. citizens to lend credibility to propaganda targeting countries including the U.S., Ukraine, Germany, and Poland. This further polarised users, some of whom began unfollowing accounts that shared bot-generated content. The bots also amplified far-right and minority movements, distorting public discourse.

Nina Jankowicz, head of the American Sunlight Project, a non-profit organisation focused on combating disinformation, told the BBC that the Russia-linked operation's use of AI to create fake accounts was unsurprising. She explained that creating fake accounts used to be one of the most time-consuming parts of such operations, but technological advances have made the process much easier.

Jankowicz also pointed out that the operation seems to have been stopped before it could gain significant traction.

"Artificial intelligence is now clearly part of the disinformation arsenal," she said.

The increasing sophistication of Russian bot operations highlights the evolving challenge of combating digital disinformation. From influencing the 2016 U.S. presidential election to escalating polarisation during the Ukraine war, these campaigns increasingly exploit AI tools to manipulate narratives and create division. As governments and social media platforms work to address these threats, the persistence of such tactics highlights the critical need for ongoing vigilance, transparency, and stronger measures to protect democratic discourse in the digital age.