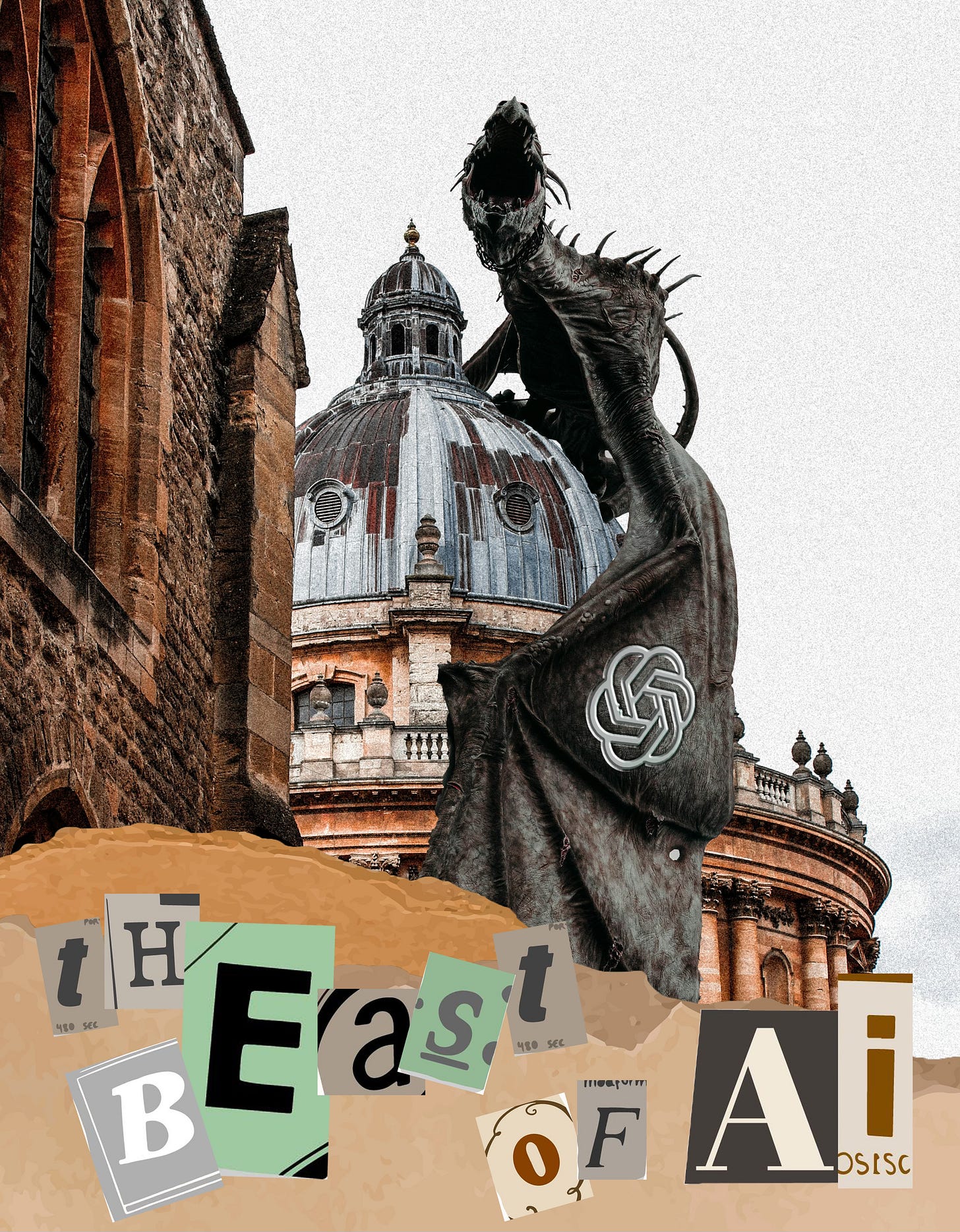

Taming the 'AI Beast' in Academia

Whether we like it or not, AI has already made a nest in the walls of universities

From Ban to Fan

Just last month, Oxford University announced its collaboration with OpenAI ‘to advance research and education’. That is quite a 180-degree turn compared with its initial reaction in early 2023, shortly after the ChatGPT explosion, when threatened professors frowned upon the tool and banned its use altogether. Now ChatGPT’s creators are offering to help the university digitise the Bodleian Libraries, and are rolling out the ChatGPT Edu tool to help academics and staff with so-called ‘admin-burdensome’ tasks, research, grading and feedback. It will also be available to students for summarisation and ideation. The company’s commitment of $50m in research grant funding doesn’t sound too bad either. Overall, it’s pretty exciting news for the academic world: it would provide much easier access to thousands of archival resources hidden in the Bodleian chambers and support deeper research in artificial intelligence. But every coin has two sides, doesn’t it?

Students’ New Best Friend

It’s 10pm and you’ve just realised you have an [insert any subject here] essay due the next day. The life drains out of you as you make copious amounts of coffee and brace yourself for the longest night of your life. This scenario, one that still makes graduates quake in their boots, is an intrinsic part of student life. But it might not be that familiar to students nowadays. A recent study by the Higher Education Policy Institute (HEPI) found that a whopping 92% of students turn to AI tools such as ChatGPT to save the day. A quick chat with a business management student on the Oxford Brookes University campus confirms that students turn to AI for help with assignments.

“I mainly use AI to explain keywords, as the academic language can be tricky to understand. It’s also useful for me to brainstorm ideas, edit, and jumpstart the work needed for assignments.”

It looks like AI has become an everyday tool in students’ lives, not just a saviour in dire situations. More than half of the students who took part in the study said they used AI to explain concepts, and 48% used it to summarise relevant articles. The share of students who put AI-generated or AI-edited text directly into their work tripled between 2024 and 2025. Although Turnitin, the plagiarism-detection software most widely used in UK universities, has rolled out an AI detection tool, some universities, such as Brookes, do not use it for fear of false positives. They do, however, require students to fill out a form detailing how AI was used when completing assignments. For now, this academic love triangle runs entirely on trust, which leaves it open to abuse.

Through the Lens of Academics

Responding to the HEPI study, a couple of academics painted quite a dreadful picture of the ‘AI beast’. In a letter to The Guardian, Professor Andrew Moran called AI ‘the ultimate convenience food’ and worried that it was creating the ‘“tinderification” of knowledge’. He and Dr Ben Wilkinson both pointed to the vampiric impact of AI on the humanities and social sciences, where it sucks out critical thinking, the very skill these disciplines depend on.

However, Dr Leander Reeves, a lecturer at Oxford Brookes University, showed no fear of artificial intelligence. She noted that she has not used it in her own research, nor does she care to use it for grading and feedback.

“I feel like students deserve my full attention. I want them to know that all this [the feedback] is my interpretation and my thoughts, which I do hope will be useful and valuable to them”.

As an academic in the field of humanities and cultural studies, she fiercely encourages her students to explore their own angles and collaborate with each other. As we become increasingly reliant on technology, she stresses the importance of weighing what we gain and what we lose by using it. Dr Reeves has also observed that, when consulted, AI tends to “over polish and flatten” academic ideas, stripping them of the most exciting part of knowledge: human observation and input. She empathised with students who have used AI at university, or been tempted to, understanding that some of them may approach education as “customers”, aiming to acquire a certificate, rather than as “scholars”, focused on bettering their understanding of the world. For her, the biggest giveaway of AI-generated text is that “while it is well written, it doesn’t actually say anything, it’s like reading the back of a shampoo bottle”.

Taming Takes Time

While there is still plenty of fear about how AI will affect not only academia but also the creative and other industries, the initial shock and excitement of ChatGPT have worn off. I believe we have entered a new phase of AI: experimentation. A perfect example is Alpha School, a new type of school in the United States that prides itself on an AI-curated curriculum that can be completed in just two hours a day. Its co-founder, MacKenzie Price, notes that it doesn’t work for every child, only for about 80-90% of them. AI is uprooting many aspects of our lives, which is understandably scary and uncomfortable. But if we give ourselves the space to experiment ethically with this exciting new toy, we might yet reach a homeostasis between humans and the ‘AI beast’.