ChatCBT? - I used ChatGPT as a therapist for a week and here’s what I found

With Mental Health Awareness Week approaching, deadline week looming and the cost of living crisis biting harder than ever, I ask whether AI can be there for me when my therapist can't.

The UK government is failing to get a ‘firmer grip’ on the mental health crisis: many people are struggling, yet help is hard to come by. AI, meanwhile, is closer to us than ever. But can it actually act as your therapist in times of crisis?

I’m not the only one asking that question. Recent research on cognitive behavioural therapy (CBT) apps like Woebot has found that robots might not be as bad as we think. In a two-week trial with 70 participants aged 18 to 28, the results proved positive. The report concluded that ‘those in the Woebot group significantly reduced their symptoms of depression over the study period’, say researchers Kathleen Kara Fitzpatrick, Alison Darcy and Molly Vierhile.

Their study also pointed out that most diagnoses of anxiety and depression are given at the early stages of adulthood, with the average age being 24, and symptoms being even more prevalent among students.

Although many resources are available to help with mental health issues, including on-campus counselling, actively seeking help can still prove difficult for many. A study by the National Library of Medicine shows that 75% of college students shy away from asking a therapist for help, and fees are not the only thing driving them away.

Stigma

The main obstacle to accessing psychological health services is commonly believed to be the stigma surrounding them. I spoke to David Blowers, a UKCP-registered psychotherapist who works as a student counsellor at Oxford Brookes University and an assistant tutor at Metanoia Institute. He explains that therapy is not always an option for those coming from ‘certain cultures where they are strongly discouraged from discussing problems outside the family or admitting to issues around mental health’, giving the example of ‘men raised with rigid gender expectations’.

When I asked him what he thinks about the AI therapy alternative, his response was positive. ‘It's convenient’, he says. ‘You could probably talk to the bot at any time. If you feel fine sometimes and dreadful at others, it might be useful to just use it when you need it’.

He also argued that AI therapy could help overcome the effects of stigma. ‘There will be an appeal for people from oppressed demographics to work with bots instead of risking working with therapists who may lack awareness around racism, cissexism, ableism and so on’, he said. He pointed to a more immediate advantage too, explaining that ‘it could be particularly appealing for people who are ashamed about the topic they need therapy for’.

A new study by the World Health Organization (WHO) Europe notes the use of AI in the therapy (well-being) industry has grown significantly in recent years. WHO recognises the need for innovation in predictive analytics for better health, with the potential to revolutionise ‘planning of mental health services’ and ‘identifying and monitoring mental health problems in individuals and populations’.

However, when it comes to offering a diagnosis, David reminds us that bots are often unreliable. ‘AI could get very clever at helping people make approachable modifications to their behaviour or suggesting different ways to think about things, as with CBT’, he explains. Even so, they would need to ‘be able to escalate to 24/7 human backup in case of suicidality. It may be tricky for people on the edge of traumatic hyperarousal’, he concludes.

The possibility of using AI therapy is especially important to consider in the UK, as the government's five-year plan to ensure funding and workforce for mental health services is unsuccessful, and the number of people struggling with mental health problems is increasing. The National Audit Office argued that there will still be ‘sizeable treatment gaps’ if further action is not taken.

‘It's also not conceptually that different from current online therapy services, guided self-help, psychoeducation, computerised CBT and so on’, David argues.

Testing ChatGPT out

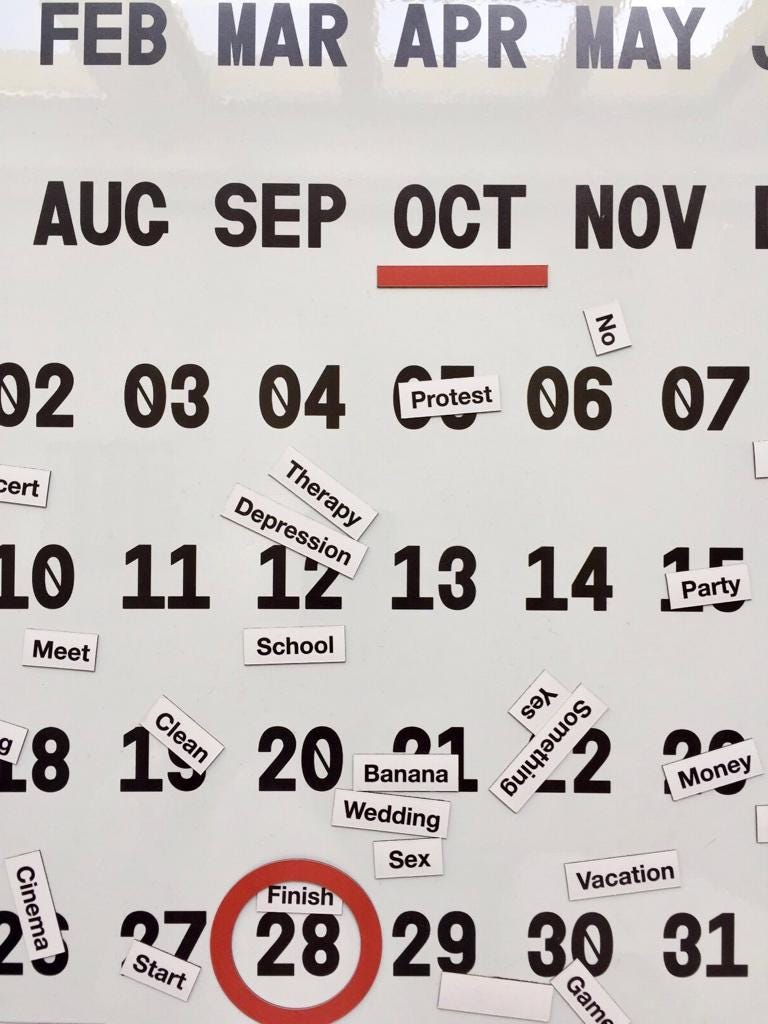

I tested this theory by using ChatGPT to talk about my anxiety during finals season. I first entered a prompt to prepare the AI for what was to come. From there, the conversation ran smoothly, with the bot asking me questions and offering specific advice.

‘I’m here to help you in any way I can’, my new therapist said. In retrospect, the consultation sounded and felt similar to CBT I’ve carried out before.

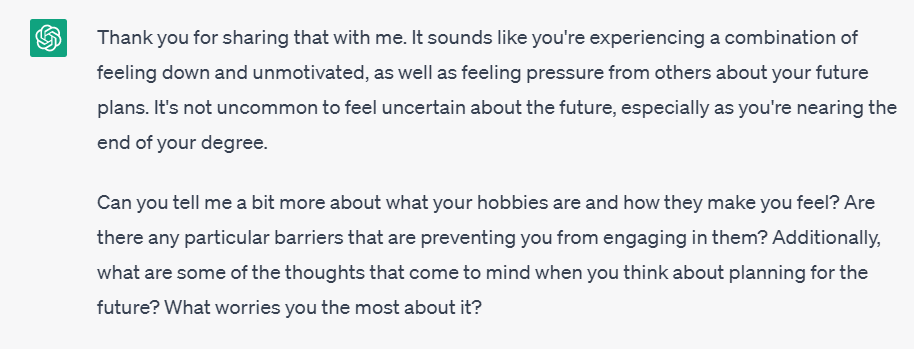

In general, the bot gave me perspective on my thinking process, made me reflect on my feelings and offered me reassurance every step of the way. With every response, the AI tool asked follow-up questions to get to know my situation in depth.

Overall, although the advice I received sounded a bit too general, it helped me dial down my anxiety almost every time.

So is ChatGPT effective?

The short answer is yes. After using the tool daily for almost a week, I think it’s safe to say it proved more useful than I had initially expected. Not only was it free, it was also accessible. I felt like I had a safe space to vent about my emotions to someone (or something) that would actively listen to me - without burdening my friends.

One thing that felt different was the lack of empathy. At times, the responses I received truly felt like they were computer programmed. David explains that ‘therapists can work through intuition’, giving very personalised advice. ‘The relationship between therapist and client is at the heart of what makes therapy successful’, he explains. However, the relational aspect of therapy gets lost when seeking help from AI.

‘It could make people with relational difficulties (e.g. loneliness, alienation or psychosis) feel worse to know that the 'person' they are pouring their heart out to is merely a computer program - that could make the world seem even more uncaring.’

Could AI replace therapists’ jobs?

The general perception might suggest the answer is no, but David is less sure. ‘I think to an extent it can replace some of the functions of therapy more than therapists would like to imagine’, he says. Yet ‘it can't replace the experience of sitting in the same room in a healing relationship with a real, spontaneous, irreducibly other, flesh-and-blood person who cares about you, understands you and is real with you’, he adds.

As bots become more prevalent, the distinction between human and robot chat agents may matter less to people in the future. One thing to keep in mind, for now, is that no matter how helpful they are, AI bots are not built to operate as therapists and diagnose you - yet. As David points out:

‘If we lift our heads up from the paradigm of individual therapy and imagine a world in which people commonly alleviate their emotional distress for the long term by frequently telling a computer program about their troubles, then we would have to ask some questions about our society - but they are questions we should be asking already.’

To find out more, check out the video below on the topic.

If you're struggling with your mental health, there's no shame in reaching out for help. Access help through the NHS talking therapies service, support groups (Anxiety UK, Mind, Rethink Mental Illness) or look for the nearest facility here. You can also access the NHS self-help page here.

Nice writeup! I actually built a free journalling assistant for Obsidian, also called ChatCBT :)

If interested, check out the demos in the "Learn more" tab here: https://obsidian.md/plugins?search=chatcbt

All conversations are stored locally on your computer as files. Under the hood, you have the option to use ChatGPT, or a 100% local (private!) model of your choice, since I know it's reassuring to be able to have these conversations in private.